Real-Time Lighting with Gaussian Splats

Applying traditional dynamic lighting techniques to Gaussian splats.

There's been a lot of interest lately in Gaussian splats

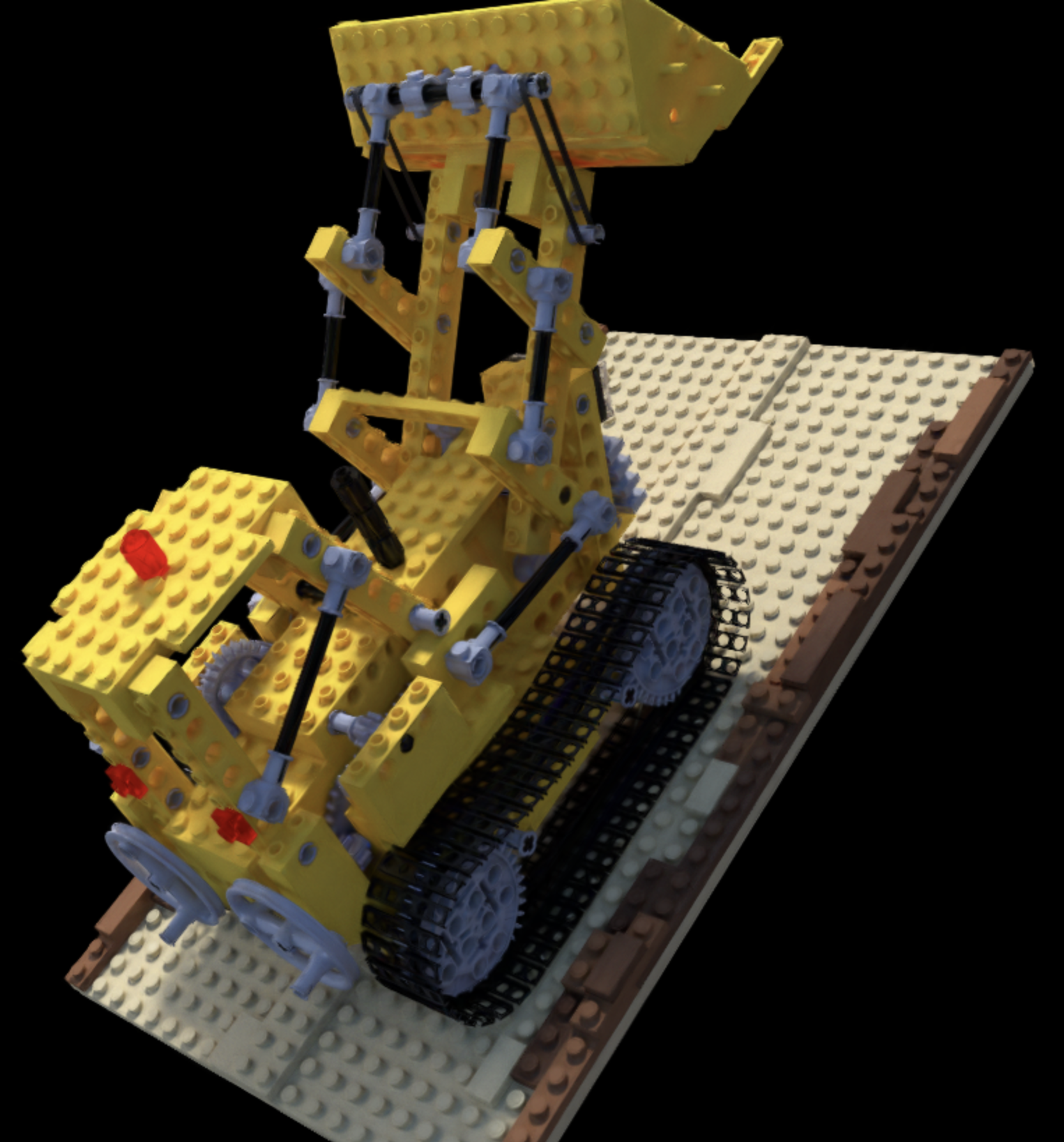

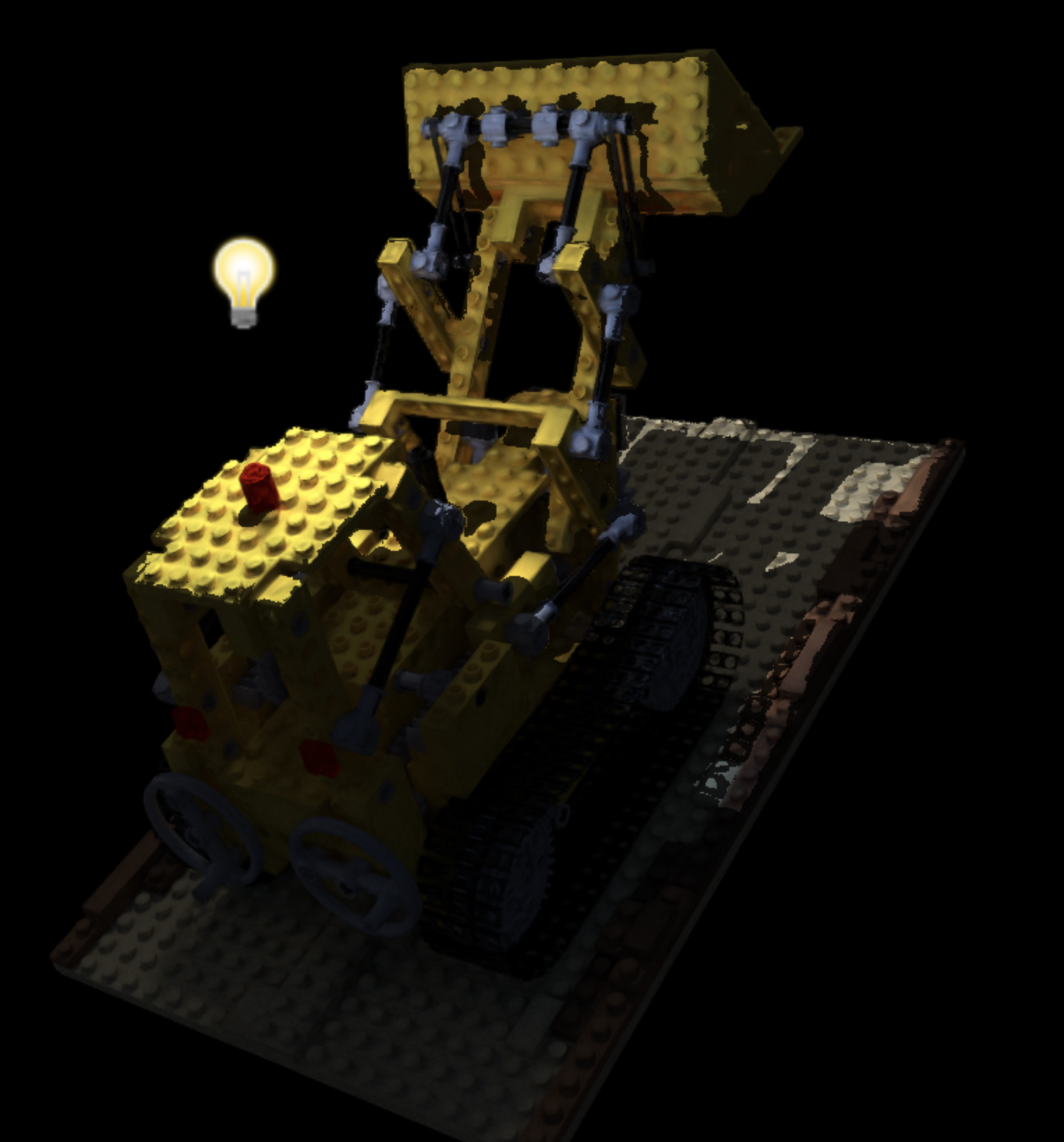

Recently I saw a tweet from a few months ago about lighting splats in real time. I thought it was cool how simple the approach was, and since I couldn't find the source code, I re-created it in WebGL. Try it out below or click here for fullscreen. You can use your mouse to move the camera or the lights, and press M to toggle between lighting mode and no lighting.

The idea is to use rasterization to render splats just like in the original paper, but to recover surface normals from depth, then light the scene using traditional shading and shadow mapping.
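To make the last step concrete, here is a minimal sketch of per-pixel shading with a shadow-map test. It assumes Lambertian diffuse shading, treats the composited splat color as albedo, and uses a simple depth comparison with a bias; the source doesn't pin down the exact shading model, and the names `is_shadowed`, `shade`, `bias` and `ambient` are made up for illustration.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def is_shadowed(depth_from_light, shadow_map_depth, bias=0.005):
    """Shadow-map test: the point is occluded if the shadow map recorded
    something closer to the light at the same texel. The bias (an assumed
    value) avoids self-shadowing from depth precision errors."""
    return depth_from_light > shadow_map_depth + bias

def shade(albedo, normal, to_light, light_color, shadowed, ambient=0.1):
    """Lambertian diffuse term plus a constant ambient term (assumption).
    `normal` and `to_light` are expected to be unit vectors."""
    n_dot_l = max(0.0, dot(normal, to_light))
    direct = 0.0 if shadowed else n_dot_l
    return tuple(a * (ambient + lc * direct) for a, lc in zip(albedo, light_color))

albedo = (1.0, 0.5, 0.25)      # composited splat color, used as albedo
normal = (0.0, 0.0, 1.0)       # pseudo-normal recovered from depth
to_light = (0.0, 0.0, 1.0)     # unit vector from the surface toward the light
white = (1.0, 1.0, 1.0)

lit = shade(albedo, normal, to_light, white, is_shadowed(2.0, 2.0), ambient=0.0)
dark = shade(albedo, normal, to_light, white, is_shadowed(3.0, 2.0), ambient=0.0)
```

In a real renderer this would run in a fragment shader, with `depth_from_light` coming from projecting the reconstructed surface point into the light's view.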

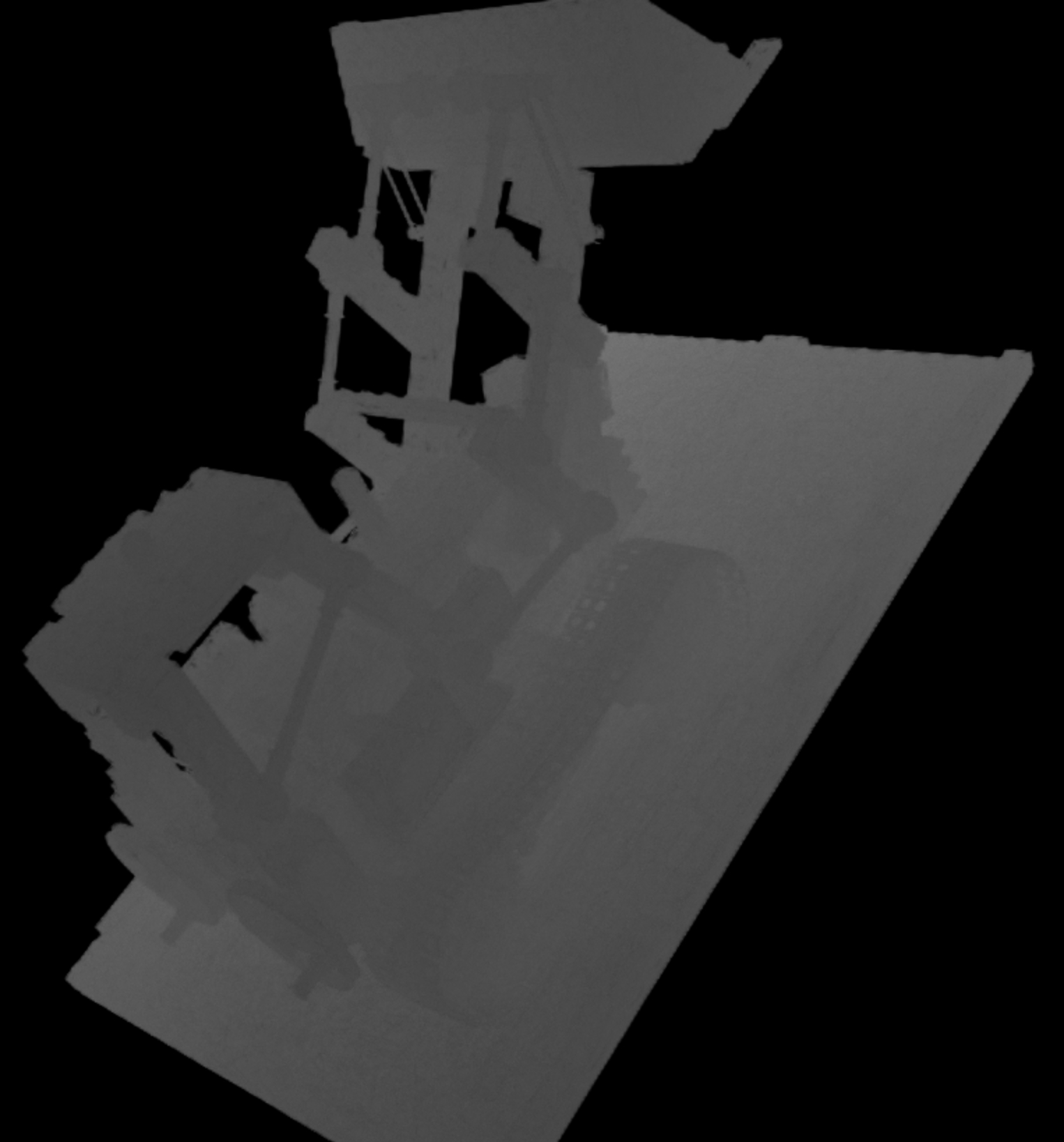

Depth is computed the same way we compute color for each pixel, using the "over" operator to do alpha compositing. Given \( N \) splats with depths \( d_i \) and alphas \( \alpha_i \) ordered front-to-back:

$$ D = \displaystyle \sum_{i=1}^N d_i \alpha_i \prod_{j=1}^{i-1} (1-\alpha_j) $$
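As a rough illustration, the sum can be evaluated for a single pixel with one front-to-back loop that tracks the running transmittance (the product term). The function name `composite_depth` and the sample values are hypothetical; a real implementation would do this per pixel on the GPU alongside color.

```python
def composite_depth(depths, alphas):
    """Front-to-back 'over' compositing of per-splat depths for one pixel.

    depths and alphas must already be sorted front-to-back.
    Returns the composited depth D and the leftover transmittance.
    """
    depth = 0.0
    transmittance = 1.0  # product of (1 - alpha_j) over the splats in front
    for d, a in zip(depths, alphas):
        depth += d * a * transmittance
        transmittance *= 1.0 - a
    return depth, transmittance

# A half-transparent splat at depth 1 in front of an opaque splat at depth 3.
depth, remaining = composite_depth([1.0, 3.0], [0.5, 1.0])
```

With these two splats the composited depth is 2.0, a point where neither splat actually sits.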

The formula feels nonphysical because it can theoretically place a surface in the empty space between two Gaussians. In practice, though, it captures the rough shapes of hard objects fairly well, even with the simplest possible implementation, which uses the depth of each Gaussian's center rather than doing something more complicated to estimate the average depth of the part of the Gaussian that overlaps a pixel.

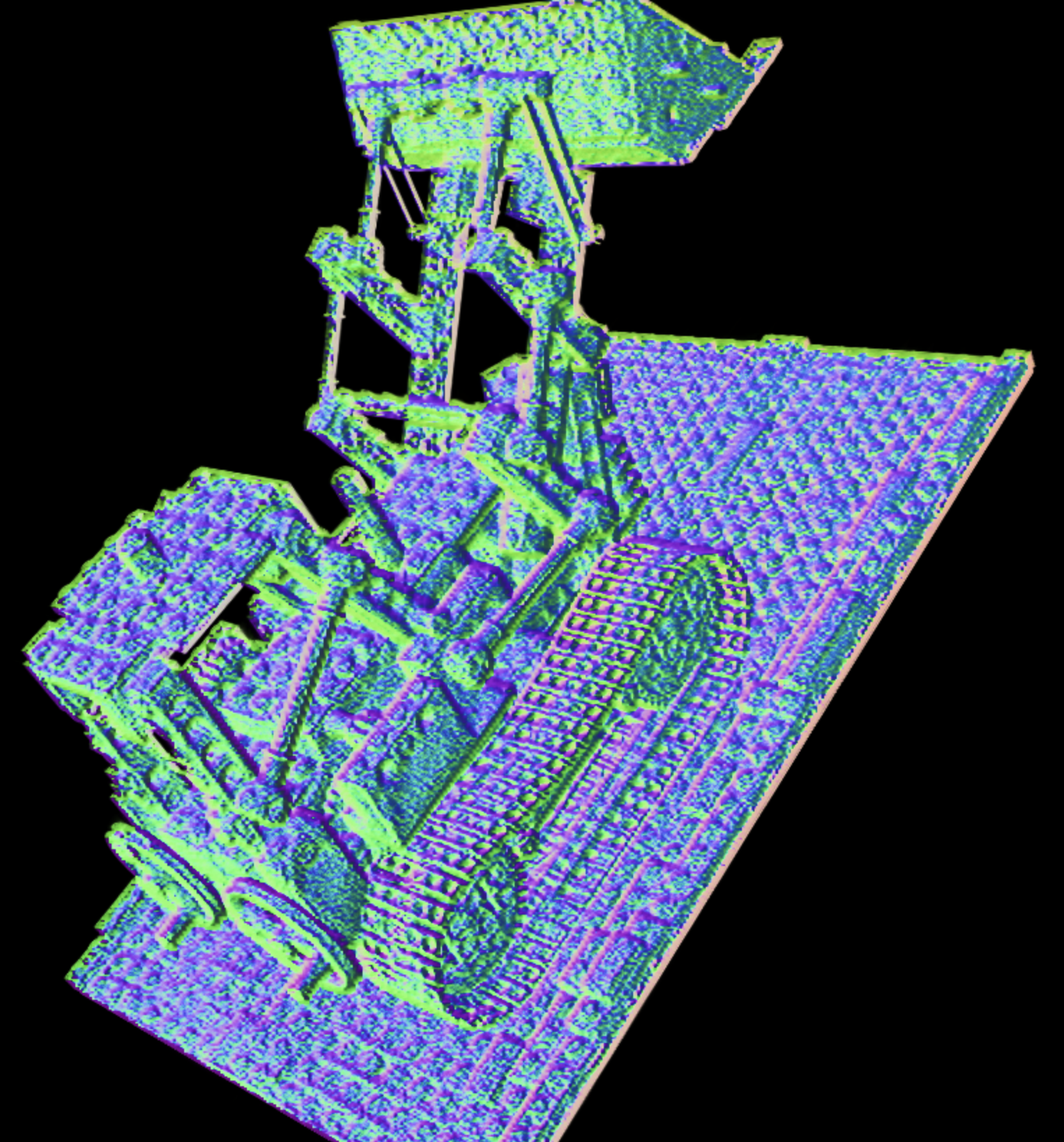

Pseudo-normals are computed for each pixel by reconstructing the world-space points of the pixel and two of its neighbors, \( \mathbf{p}_0, \mathbf{p}_1, \mathbf{p}_2 \), then crossing the resulting pseudo-tangent and pseudo-bitangent vectors: \( (\mathbf{p}_1 - \mathbf{p}_0) \times (\mathbf{p}_2 - \mathbf{p}_0) \).
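Here is a small sketch of that computation. To stay short it assumes a pinhole camera and reconstructs points in camera space rather than world space (going to world space would add a camera-to-world transform); the intrinsics `fx, fy, cx, cy` and the choice of the right and bottom neighbors are assumptions made for illustration.

```python
import math

def unproject(px, py, depth, fx, fy, cx, cy):
    """Pixel coordinates + depth -> camera-space point (pinhole model, assumed)."""
    return ((px - cx) * depth / fx, (py - cy) * depth / fy, depth)

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return tuple(c / length for c in v) if length > 0.0 else (0.0, 0.0, 0.0)

def pseudo_normal(depth, x, y, intrinsics):
    """Normal at pixel (x, y) from its right and bottom neighbors."""
    p0 = unproject(x, y, depth[y][x], *intrinsics)
    p1 = unproject(x + 1, y, depth[y][x + 1], *intrinsics)
    p2 = unproject(x, y + 1, depth[y + 1][x], *intrinsics)
    # (p1 - p0) x (p2 - p0); the sign may need flipping depending on the
    # handedness of the coordinate system and the image y direction.
    return normalize(cross(sub(p1, p0), sub(p2, p0)))

flat = [[2.0, 2.0], [2.0, 2.0]]            # a wall facing the camera
n = pseudo_normal(flat, 0, 0, (100.0, 100.0, 1.0, 1.0))
```

For a flat wall at constant depth the result is aligned with the view axis, as expected.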

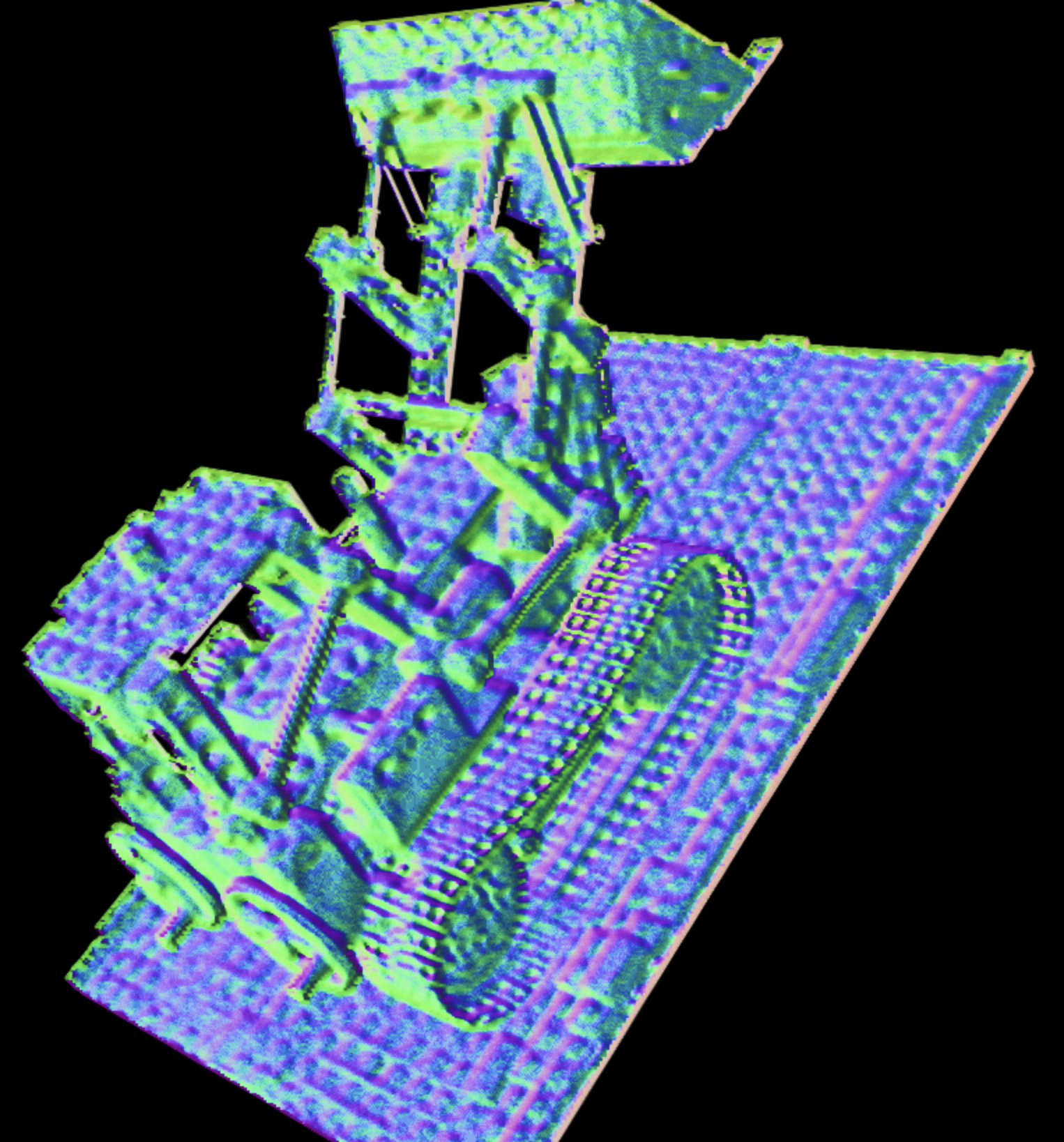

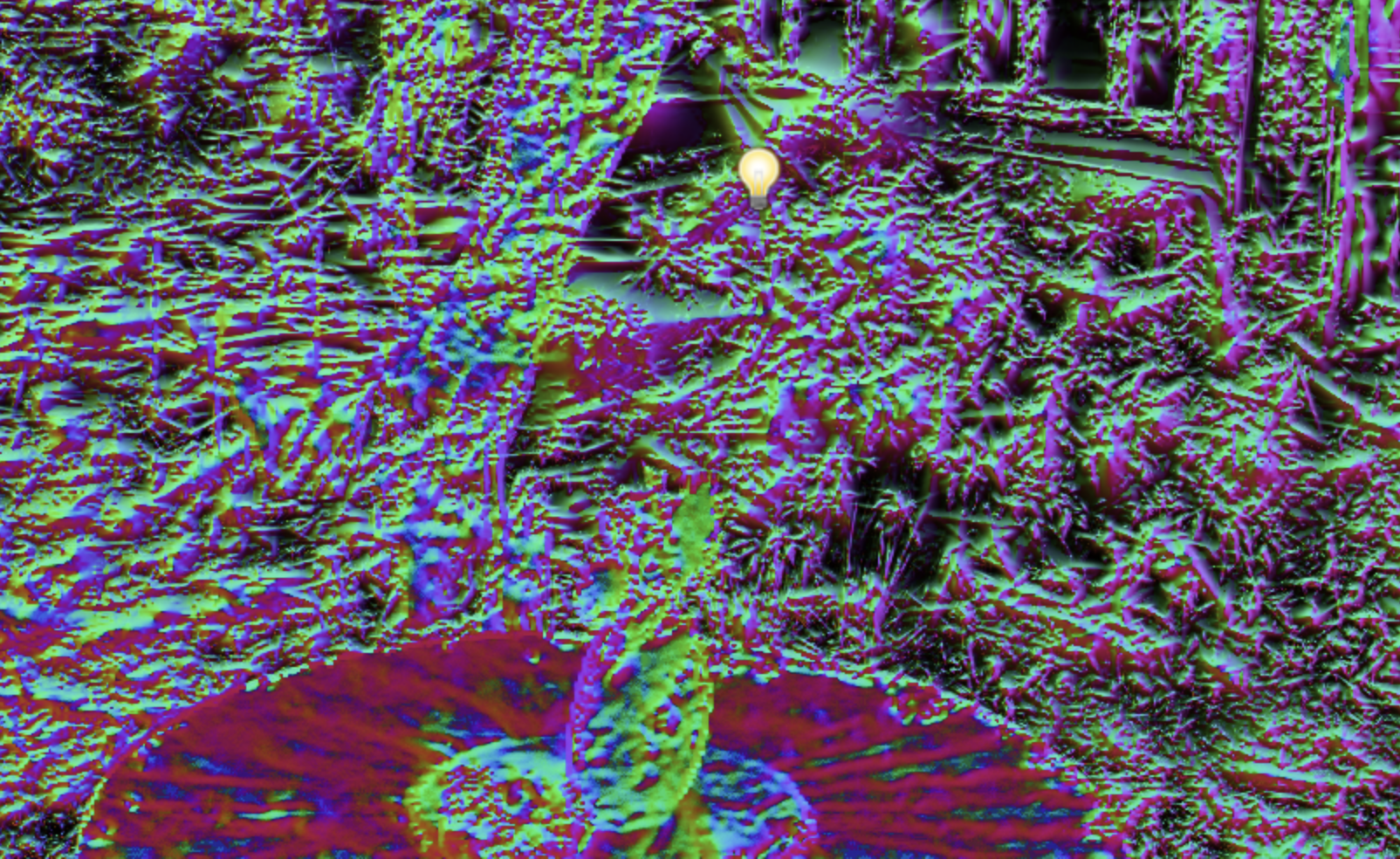

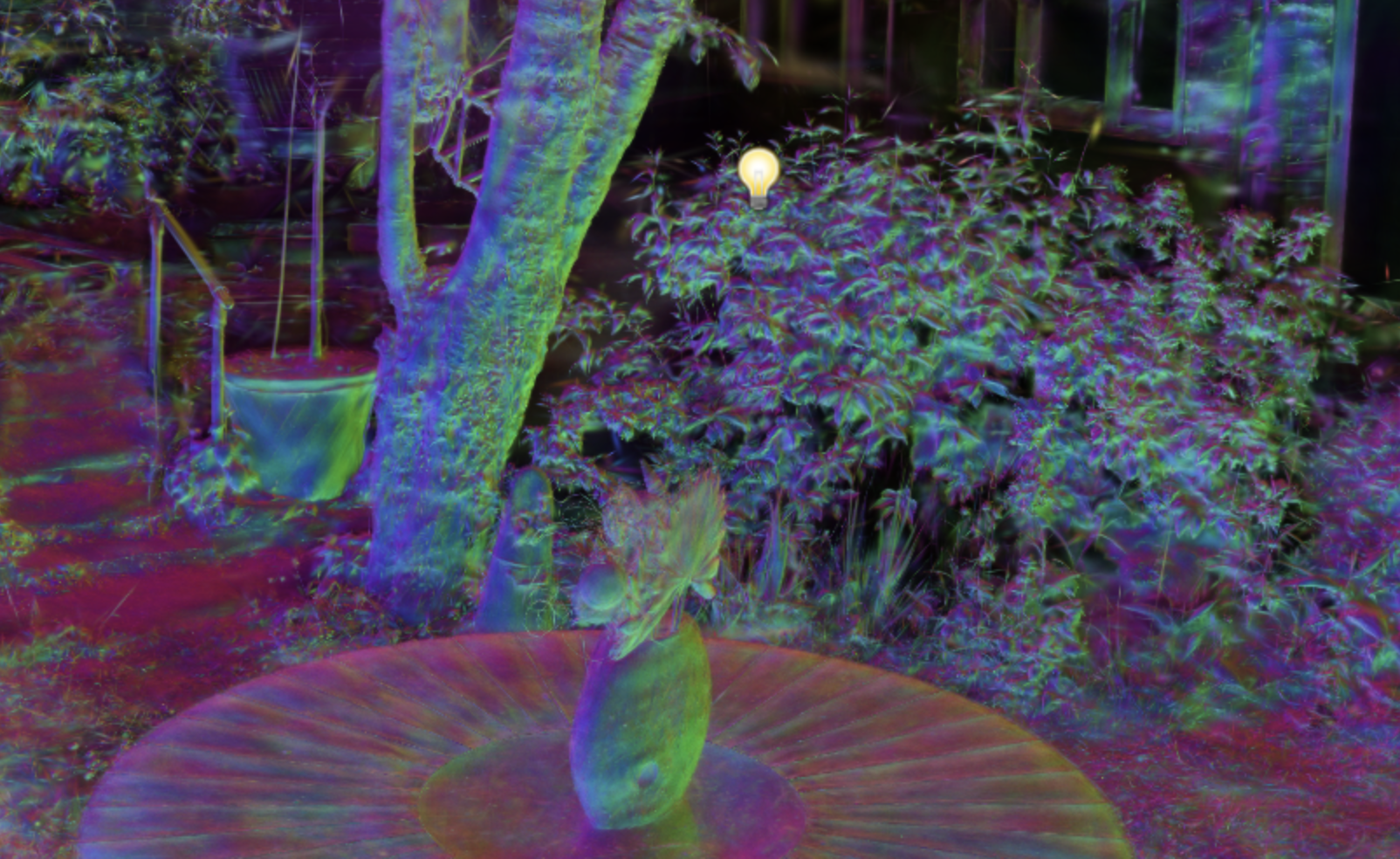

Gaussian splat reconstructions are fuzzy representations optimized to look right on screen rather than to form coherent surfaces. As a result, the recovered depth is quite noisy even when the overall shape is captured decently, and the lit surfaces look bumpy. As the Twitter thread suggested, we can mitigate the bumpiness somewhat by running a bilateral filter over depth before normal recovery. A bilateral filter is a noise-reducing filter similar to a Gaussian blur, except that it also preserves edges in the image. The goal is a depth image with much less noise that still contains accurate object shapes.
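For reference, a brute-force bilateral filter over a depth image might look like the sketch below. Each neighbor is weighted by its distance in the image and by how different its depth is from the center pixel, so samples across a depth discontinuity contribute almost nothing. The parameter names and default values (`radius`, `sigma_space`, `sigma_depth`) are assumptions, not values from the thread or my demo.

```python
import math

def bilateral_filter(depth, radius=2, sigma_space=2.0, sigma_depth=0.1):
    """Edge-preserving smoothing of a 2D depth image (list of rows)."""
    height, width = len(depth), len(depth[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            center = depth[y][x]
            total = 0.0
            weight_sum = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < height and 0 <= nx < width):
                        continue  # skip samples outside the image
                    sample = depth[ny][nx]
                    # Spatial weight: the same falloff as a Gaussian blur.
                    w_space = math.exp(-(dx * dx + dy * dy) / (2.0 * sigma_space ** 2))
                    # Range weight: suppresses samples at a very different depth.
                    w_depth = math.exp(-((sample - center) ** 2) / (2.0 * sigma_depth ** 2))
                    weight = w_space * w_depth
                    total += sample * weight
                    weight_sum += weight
            # weight_sum >= 1 because the center pixel always has weight 1.
            out[y][x] = total / weight_sum
    return out

# A noisy near surface (about 1.0) next to a noisy far surface (about 5.0).
noisy = [[1.0, 1.02, 0.98, 5.0, 5.02, 4.98] for _ in range(4)]
smooth = bilateral_filter(noisy)
```

The noise on each side of the step is averaged down while the step itself stays sharp, which is the property we want before taking the cross products for normals.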

In some splat reconstructions, the underlying geometry is still too bumpy. For example, in the Mip-NeRF360

Can we improve the geometry reconstructed during training? A few papers do this. Relightable 3D Gaussians

The explicit normals make a huge difference. Compare the same scene reconstructed via Relightable Gaussians but rendered with pseudo-normals vs. explicit normals:

The R3DG paper's reconstructed materials can be used for physically-based re-rendering of objects

Ideas for improvement

The following are my scattered thoughts as someone still pretty new to graphics programming.

The surface-based shading technique I used works surprisingly well. Since most of what we see in Gaussian splat scenes are hard objects with well-defined boundaries, we're able to recover a fairly accurate surface, which is enough for us to perform traditional lighting. What I've built so far only has basic shading and shadow mapping, but it could easily be extended with more advanced lighting techniques to provide global illumination and PBR.

One thing this approach doesn't handle well is fuzzy surfaces and objects like grass. In splat reconstructions today these are usually represented with many scattered, mixed-size, lower-density Gaussians, which look terrible with surface-based shading because the recovered surface is so bumpy. How could we improve how these look?

- A common technique to render volumes like smoke or fog in real-time is to use ray-marching. We intentionally march multiple steps inside a volume and measure the light at each step to approximate the color reflected along the ray. Lighting can be made fast with techniques like caching lighting in a voxel grid or deep shadow maps.

- Gaussian splats could be rendered using ray-marching. But ray-marching within each Gaussian probably wouldn't help, because most of these Gaussians are so small that light doesn't vary much within their volume. So it's enough to take a single sample, which is what rasterization is doing. Ray-marching is also a lot slower than rasterization, and we'd be trading off performance just so that the few fuzzy parts of a scene look better.

- What we really want here is more accurate light reflection, firstly because there is no “surface” within these fuzzy volumes and secondly because we want to capture the fact that light continues to travel into the volume. The first problem seems trickier to solve because we'd need some way of deciding whether an individual Gaussian is part of a surface or not.
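To make the ray-marching idea from the first bullet concrete, here is a minimal single-ray sketch. It assumes an absorbing and scattering medium described by two hypothetical callbacks, `density_at(t)` and `light_at(t)`, and uses Beer-Lambert attenuation per step; it illustrates the general technique and is not something my demo does.

```python
import math

def ray_march(density_at, light_at, t_near, t_far, steps=64):
    """March along a ray, accumulating in-scattered light front-to-back.

    density_at(t): extinction coefficient at distance t along the ray.
    light_at(t):   light reaching the sample at t (e.g. looked up from a
                   voxel grid or a deep shadow map).
    Returns (radiance, transmittance left after the volume).
    """
    dt = (t_far - t_near) / steps
    transmittance = 1.0
    radiance = 0.0
    for i in range(steps):
        t = t_near + (i + 0.5) * dt               # sample at the step midpoint
        alpha = 1.0 - math.exp(-density_at(t) * dt)  # opacity of this step
        radiance += transmittance * alpha * light_at(t)
        transmittance *= 1.0 - alpha
    return radiance, transmittance

# Uniform fog with density 1, fully lit, over a distance of 2 units.
radiance, transmittance = ray_march(lambda t: 1.0, lambda t: 1.0, 0.0, 2.0)
```

The accumulation inside the loop is the same "over" compositing used for splats, except that every step inside the volume gets its own lighting sample.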

There are applications which render objects as fuzzy volumes or point clouds with lighting, animations, etc. successfully at massive scale.

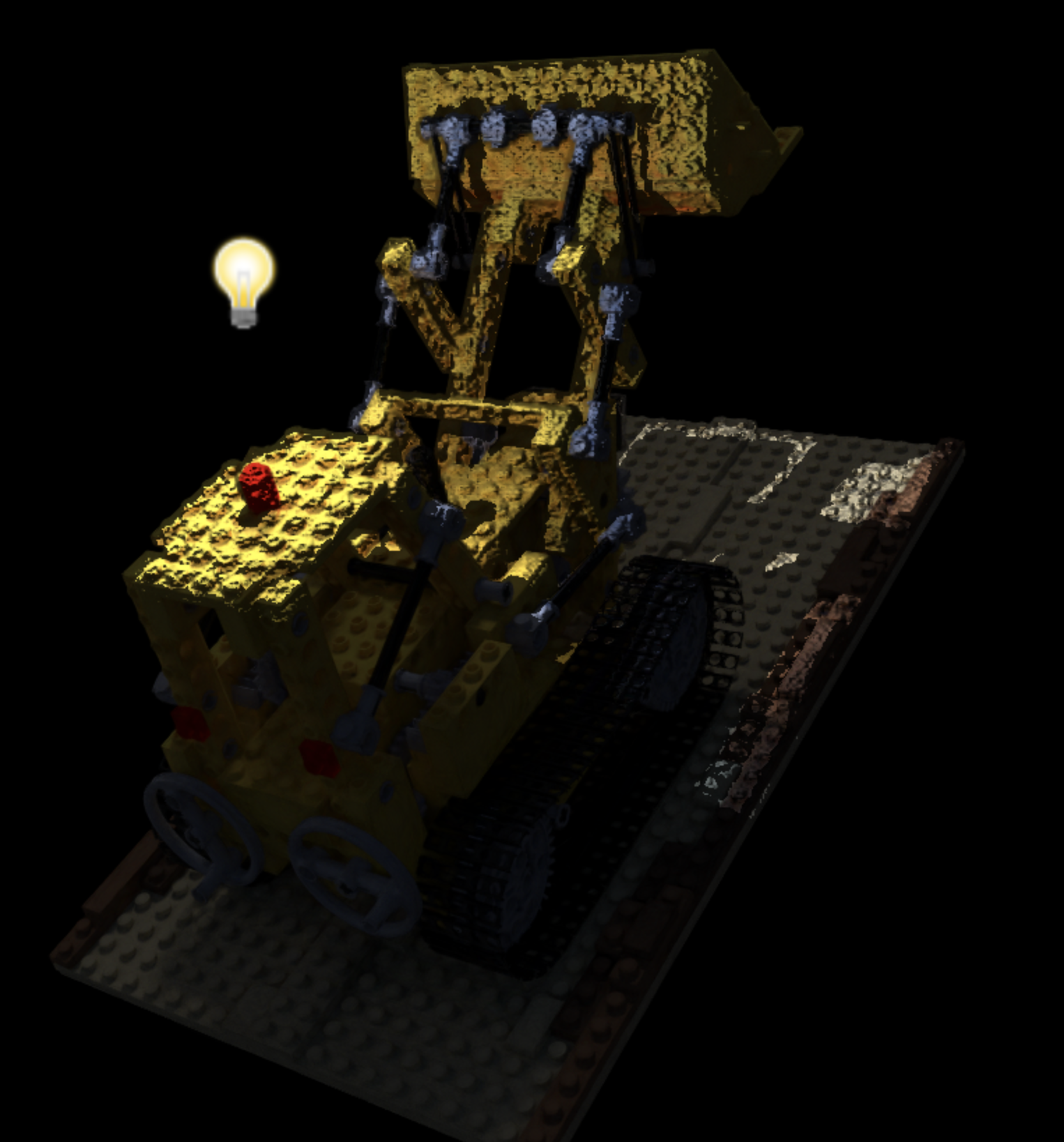

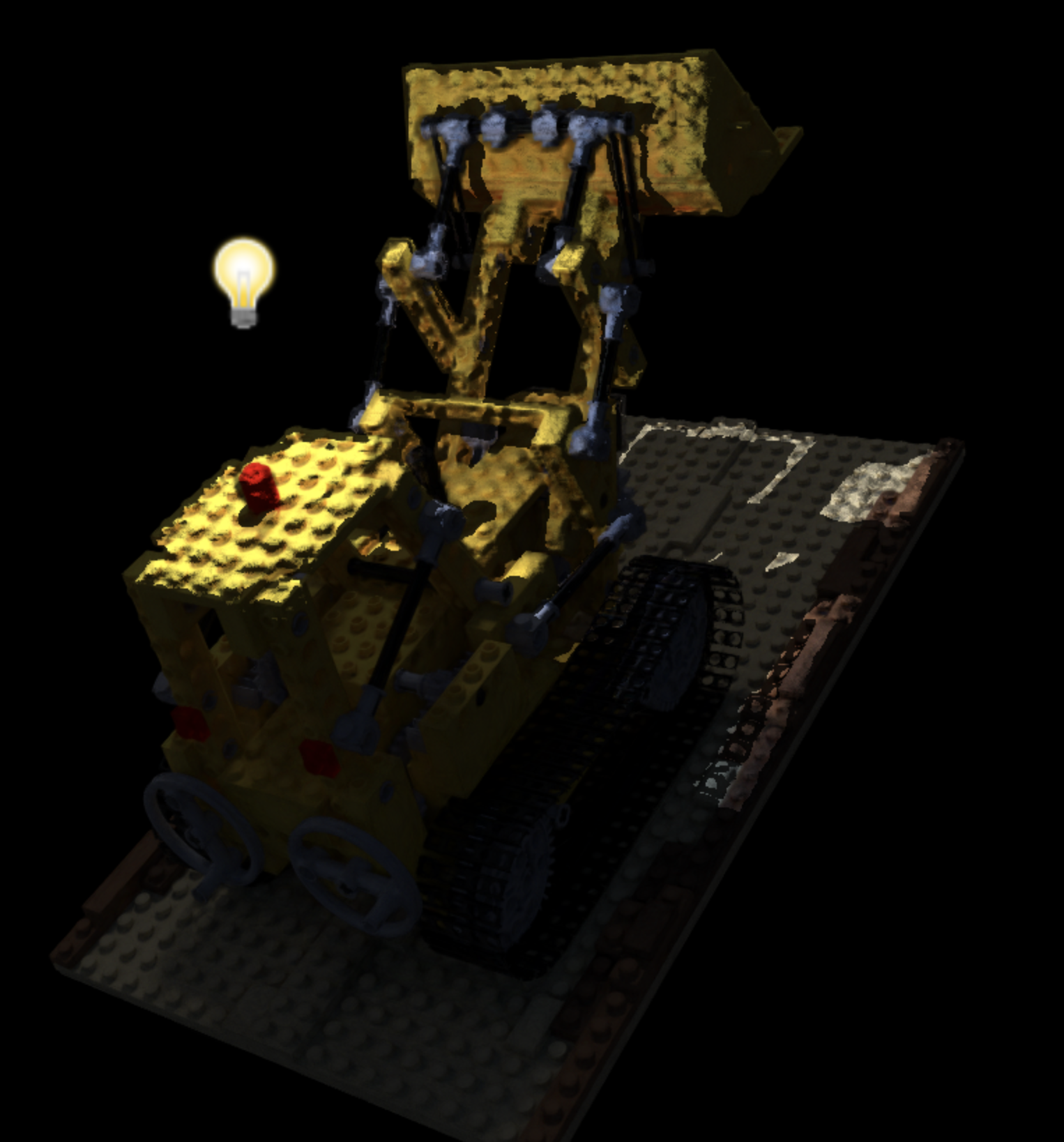

One example is Dreams, which came out in 2020 for the PS4 and which used a combination of signed distance fields and point clouds to represent user-generated content. The use of these alternate graphics primitives gave the game a unique "painterly" look and feel.

Dreams appears to use something much more like surface-based shading.

Alex Evans's SIGGRAPH 2015 talk

things evolved quite a bit since the siggraph preso. the E3 trailer from that era was rendered using the atomic splat renderer mentioned at the end of the talk, and it's still my favourite 'funky tech' wise, however it suffered because everything had microscopic holes! ... why is this a big problem? because this broke occlusion culling, which we rely on heavily for performance ...

so to sum up: dreams has hulls and fluff; hulls are what you see when you see something that looks 'tight' or solid; and the fluff is the painterly stuff. the hulls are rendered using 'raymarching' of a signed distance field (stored in bricks), (rather than splatted as they were before). then we splat fluffy stuff on top, using lots of layers of stochastic alpha to make it ... fluffy :) this all generates a 'classic' g-buffer, and the lighting is all deferred in a fairly vanilla way (GGX specular model).

Why?

What's the point of all of this? If we want dynamic lighting in scenes captured by Gaussian splats, why don't we just convert the splats to a mesh representation and relight that?

If we convert to meshes, we can also incorporate splats into existing mesh-based workflows and renderers rather than building out specialized paths. This would be awesome for industry adoption of splats. Existing mesh-based renderers are also optimized to the hilt. Geometry systems like Unreal Engine 5's Nanite already make it possible to use extremely detailed, high-poly real-world captures via photogrammetry scans in videogames today.

Gaussian splats make it even easier to get these real-world captures. So there is lots of interest here. Papers like SuGaR

That said, working with point cloud and splat representations directly has some advantages. While meshing a splat representation is not too difficult, it takes extra time and resources, and the result is not guaranteed to be accurate. It's also sometimes easier to work with splats, for example to give scenes artistic flair like in Dreams. For similar reasons, SuGaR and Gaussian Frosting propose a hybrid rendering solution where objects are rendered as meshes with a thin layer of Gaussian splats attached to the surface to capture fuzzy details like fur.

Overall, while Gaussian splatting was a breakthrough technology for fast and accurate 3D reconstruction, we're still discovering what the splat format will be useful for beyond just “3D photos”. Time will tell if splats will be useful mainly as an intermediate format that we get from real-world captures, or if they'll be everywhere in future games and 3D design software. In the meantime, research into rendering splats continues. Some interesting papers:

- Animation: Animatable Gaussians, GAvatar

- Lighting: Relightable 3D Gaussians, GaussianShader

- Modeling fuzzy things: Gaussian Frosting

- Rendering larger scenes faster: CityGaussian, RadSplat